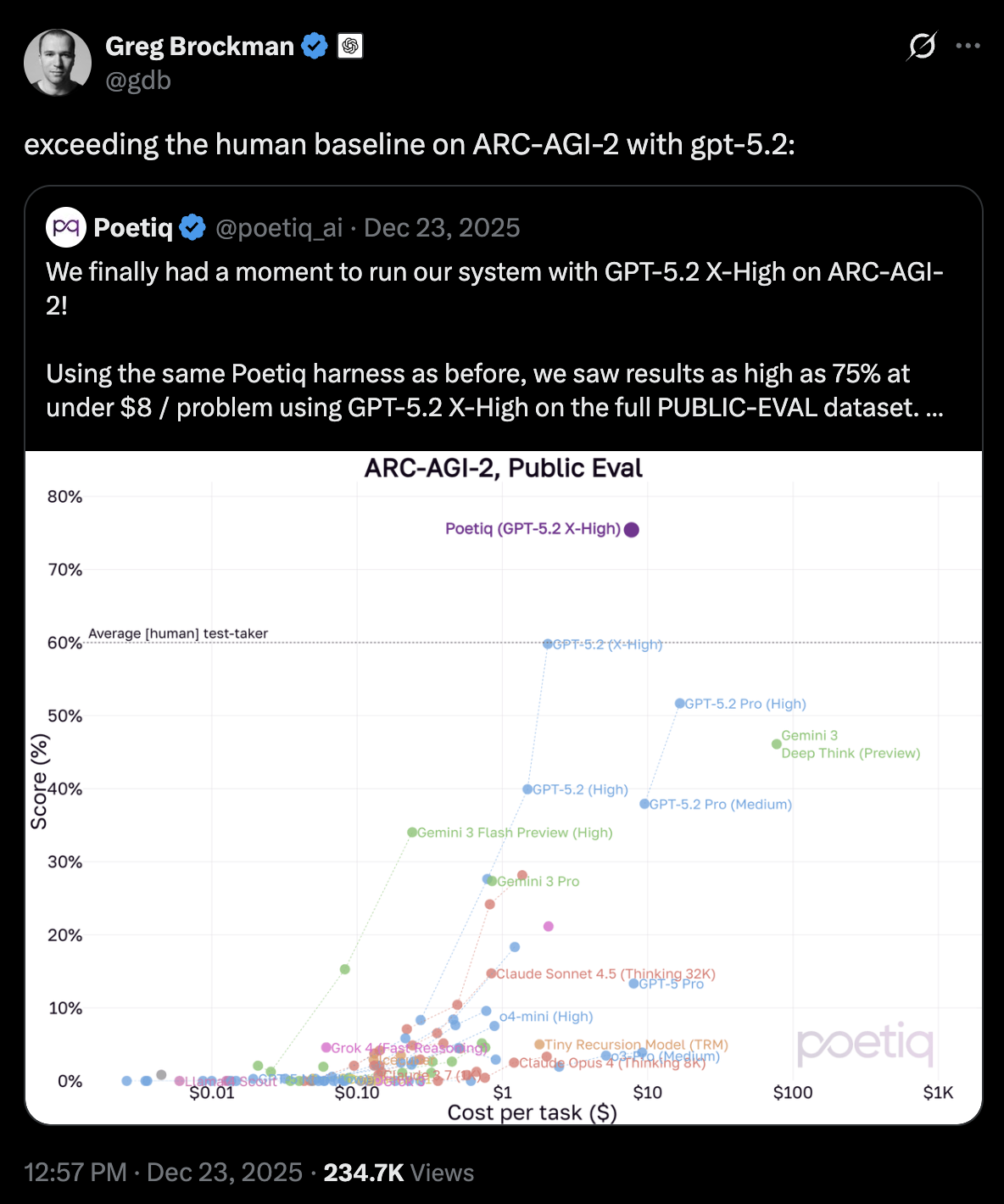

When Poetiq posted their results on ARC-AGI-2 in early December, Reddit and X lit up. A six-person startup had just beaten Gemini 3 Deep Think on one of AI's toughest benchmarks - at half the cost per task. ARC-AGI (Abstraction and Reasoning Corpus) measures something most AI systems struggle with: the ability to solve novel problems the way humans do, using logic and generalization rather than memorized patterns. Days later, when OpenAI released GPT-5.2, Poetiq immediately incorporated it and jumped to 75% accuracy, a 16-percentage-point improvement over the previous leader. OpenAI Co-Founder & President Greg Brockman took notice.

Today, Poetiq announced $45.8M in seed funding co-led by FYRFLY Venture Partners and Surface Ventures, with Y Combinator, 468 Capital, Operator Collective, Hico Ventures, and Neuron Venture Partners participating.

The Company

Poetiq is a meta-system that sits on top of any LLM - OpenAI’s GPT, Anthropic’s Claude, Google’s Gemini, Meta’s Llama, etc. - and makes it better at solving hard problems. Instead of requiring thousands or millions of examples for fine-tuning, clients provide Poetiq with a problem and a few hundred examples. The system generates a specialized agent that gets more accurate and cost-efficient with each iteration through recursive self-improvement.

Why You Should Pay Attention

As Co-CEO Shumeet Baluja puts it: “LLMs are impressive databases that encode a vast amount of humanity’s collective knowledge. They are simply not the best tools for deep reasoning.” While LLM capabilities are improving with impressive speed, including in reasoning, pre-training and post-training through reinforcement learning take an extraordinary amount of capital. Only the biggest companies can afford it. Meanwhile, most real-world business problems that could benefit from AI remain too hard or too expensive for LLMs alone to solve. An MIT study from August 2025 underscores the lack of ROI for enterprises so far.

Poetiq's approach is different. Within six months of launching, they topped the ARC-AGI-2 benchmark, which measures how well AI systems can generalize problem-solving skills the way humans do. The Abstraction and Reasoning Corpus was specifically designed to test for "human-like generalization" rather than pattern memorization - exactly the capability enterprises need for complex business problems.

The Details

The platform uses recursive self-improvement instead of reinforcement learning. Here's why that matters: RL requires hundreds of thousands or millions of examples and weeks of training time. Poetiq examines a few hundred examples, figures out how to build an agent that's better at solving those types of problems, and deploys in hours or days instead of weeks.

These agents use LLMs to solve problems but don't require any retraining. They can employ various reasoning strategies - iterative problem-solving, self-auditing, backtracking - and get smarter with each problem they tackle. For the ARC-AGI benchmark, Poetiq produced specialized agents in hours, not weeks. The system adapts to each LLM's quirks and figures out how to best extract and synthesize the information each contains.
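To make the "propose, self-audit, backtrack" idea concrete, here is a toy sketch in Python. It is not Poetiq's system, whose internals this post does not describe. Every name in it (`propose_candidates`, `audit`, `solve`, `EXAMPLES`) is hypothetical, and the hard-coded candidate list stands in for what would be LLM calls in a real agent. The point is only the shape of the loop: try an idea, check it against the handful of examples you have, and move on to the next idea when the check fails.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Example = Tuple[List[int], List[int]]                # (input, expected output)
Candidate = Tuple[str, Callable[[List[int]], List[int]]]

# A handful of examples describing the task (here: sort a list).
EXAMPLES: List[Example] = [([3, 1, 2], [1, 2, 3]), ([2, 1], [1, 2])]


def propose_candidates() -> Iterable[Candidate]:
    """Hypothetical stand-in for an LLM proposing candidate solutions."""
    yield "reverse", lambda xs: xs[::-1]
    yield "sort ascending", lambda xs: sorted(xs)
    yield "double each", lambda xs: [2 * x for x in xs]


def audit(fn: Callable[[List[int]], List[int]], examples: List[Example]) -> float:
    """Self-audit: fraction of examples the candidate reproduces exactly."""
    if not examples:
        raise ValueError("need at least one example to audit against")
    passed = sum(1 for inp, expected in examples if fn(inp) == expected)
    return passed / len(examples)


def solve(
    examples: List[Example],
    candidates: Iterable[Candidate],
    threshold: float = 1.0,
) -> Optional[Tuple[str, float]]:
    """Try candidates in order; keep the best, stop once one passes the audit."""
    best: Optional[Tuple[str, float]] = None
    for name, fn in candidates:
        score = audit(fn, examples)
        if best is None or score > best[1]:
            best = (name, score)
        if score >= threshold:
            break  # audit passed: accept this candidate
        # audit failed: backtrack by discarding it and trying the next idea
    return best


result = solve(EXAMPLES, propose_candidates())
print(result)  # ('sort ascending', 1.0)
```

In this toy, "reverse" passes only one of the two examples, so the loop backtracks and accepts "sort ascending" on the next try. No model weights change anywhere, which is the contrast with retraining the paragraph above draws.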

The beauty of this approach is that Poetiq doesn't compete with frontier models - it enhances them. Any combination of LLMs, any native AI platform, any AI use case. Companies can use it to improve their proprietary in-house models or get better performance from commercial ones. As Poetiq puts it: they're building intelligence on top of LLMs, not into them, creating a complete intelligent ecosystem that harnesses what LLMs contain for better reasoning and problem-solving.

For enterprise customers, the use cases are the AI implementations that haven't generated ROI yet. Complex workflows that require actual reasoning, not just pattern matching. Problems where fine-tuning would require massive datasets and weeks of compute time. Situations where the cost of RL post-training makes the business case fall apart. Domains outside of coding and math where generating synthetic data for RL training is impractical.

Why We're Invested

Co-CEOs Shumeet Baluja and Ian Fischer spent 21 and 10+ years respectively at Google / DeepMind, where they noticed frontier LLMs were struggling with most hard problems (and plenty of easy ones). Baluja founded Google’s mobile practice and computer vision research group, contributed to 170+ patents, and helped create YouTube's copyright system. Fischer joined Google / DeepMind in 2015 when Apportable, the company where he had been co-founder and CTO, was acquired.

The team of six managed to establish a new benchmark record within their first six months. Rather than compete against frontier models, they found a way to coax more intelligence from every LLM available - positioning Poetiq as essential infrastructure for companies trying to make AI work for real-world business applications. Their technical approach using recursive self-improvement rather than expensive RL post-training demonstrates a fundamentally different path forward. And because they work across all LLMs, they're not picking sides in the model wars.

In a market where the economics of AI face real questions, Poetiq offers a way forward: better reasoning capabilities at a fraction of the cost, with dramatically less data and compute required.

What's Next

Poetiq is hiring AI scientists, engineers, and interns to build their meta-system into a more user-friendly product for enterprise customers. Their ideal clients have business problems that have been too difficult or too expensive for LLMs to solve, even with RL post-training. Visit poetiq.ai to learn more about the platform.
